Strategies to Cut Your Web Scraping Costs

Cutting your web scraping costs without sacrificing performance is entirely possible with the right tools and strategy. In today’s data-driven world, web scraping powers everything from competitive intelligence and pricing automation to market research and lead generation. However, the more data you collect, the more it can eat into your budget if you’re not careful. This article walks you through practical methods to optimize your scraping efforts so you can gather the data you need while minimizing costs. You will learn how web scraping pricing works, which factors affect scraping costs, and how to use features like auto rotation and residential proxies with unlimited bandwidth to your advantage.

Understanding the Key Cost Factors in Web Data Collection

The cost of data collection is influenced by several factors, including the complexity of the data, website restrictions, and the frequency of extraction. Being aware of these elements can help in devising a cost-effective data strategy.

1️⃣ Data Complexity

Modern websites are not as simple as they used to be. Many utilize JavaScript-heavy frameworks to load content dynamically, making traditional scraping techniques less effective. Websites with deeply nested data structures require more advanced tools, increasing computational costs. Additionally, large datasets that require frequent updates add to storage, bandwidth, and processing expenses.

2️⃣ Site Restrictions

Websites often implement multiple restrictions to prevent automated data collection, including:

- Rate Limiting: Many sites limit the number of requests per minute from a single IP, resulting in potential bans if the limit is exceeded.

- CAPTCHAs: These challenge-response tests slow down automation and require solving services, which adds extra costs.

- IP Blocking: Many websites blacklist specific IPs to prevent bots from accessing their content.

Working within these restrictions requires a robust proxy setup and well-paced requests, so that data collection continues with as few interruptions as possible.
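Rate limits in particular can often be handled on the client side before any proxy is involved. The following is a minimal sketch, assuming you know (or have estimated) the per-minute limit of the target site. The `Throttle` class and its parameters are illustrative names, not part of any library.

```python
import time


class Throttle:
    """Spaces out calls so they never exceed max_per_minute."""

    def __init__(self, max_per_minute, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = 60.0 / max_per_minute
        self._clock = clock      # injectable so the class can be tested
        self._sleep = sleep
        self._next_allowed = None

    def wait(self):
        """Block until the next request is allowed; return seconds slept."""
        now = self._clock()
        delay = 0.0
        if self._next_allowed is not None and now < self._next_allowed:
            delay = self._next_allowed - now
            self._sleep(delay)
            now = self._next_allowed
        # Schedule the earliest moment the following request may start.
        self._next_allowed = now + self.min_interval
        return delay
```

Calling `throttle.wait()` before each request keeps a single IP under the limit, which tends to be cheaper than recovering from a ban afterwards.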

3️⃣ Cost Estimation

Before beginning any large-scale data-gathering operation, estimating costs is essential. Several factors contribute to overall expenses, including:

- Volume of Data: The more data you collect, the more you pay for bandwidth, storage, and processing power.

- Frequency of Scraping: Higher scraping frequencies lead to increased operational costs.

- Website Behavior: Some websites are designed to be scrape-resistant, demanding more resources to bypass restrictions.

An accurate estimation of these factors helps organizations determine whether it is more efficient to build their own data pipelines or leverage prebuilt datasets.
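As a rough illustration of how volume and frequency combine, the sketch below estimates monthly bandwidth cost under metered pricing. The function name, the parameters, and the sample figures are assumptions for the example only. Real bills may also include storage, compute, and CAPTCHA-solving fees.

```python
def estimate_monthly_cost(pages_per_run, runs_per_month, avg_page_mb, price_per_gb):
    """Rough monthly bandwidth cost, in the same currency as price_per_gb."""
    total_pages = pages_per_run * runs_per_month
    gigabytes = total_pages * avg_page_mb / 1024  # assumes 1 GB = 1024 MB
    return gigabytes * price_per_gb


# Placeholder numbers: 10,000 pages per run, 30 runs a month,
# 0.5 MB per page, 4.00 per GB.
print(estimate_monthly_cost(10_000, 30, 0.5, 4.0))  # 585.9375
```

Running the same calculation with a weekly schedule, or a smaller page size after trimming requests, shows quickly which factor dominates the budget.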

Use Residential Proxies to Avoid Blockages and Repeat Requests

One hidden cost in web scraping is getting blocked and having to retry the same request multiple times. Every retry consumes bandwidth and compute without returning new data, which inflates costs. Residential proxies with automatic rotation offer a cost-effective solution. Because their traffic comes from IP addresses assigned to real households, requests look like ordinary user traffic and are less likely to be blocked. Providers that offer unlimited bandwidth help you make the most of your scraping sessions without worrying about overage fees. This is especially helpful for large-scale projects where every retry costs money.
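To see how quickly retries add up, here is a small sketch under a simplifying assumption: each attempt is blocked independently with the same probability, and you retry until the request succeeds. The function names are illustrative.

```python
def expected_attempts(block_rate):
    """Average attempts needed per successful page.

    Assumes every attempt is blocked independently with probability
    block_rate and is retried until it succeeds.
    """
    if not 0 <= block_rate < 1:
        raise ValueError("block_rate must be in the range [0, 1)")
    return 1 / (1 - block_rate)


def retry_overhead(block_rate):
    """Extra attempts per successful page, as a fraction of one request."""
    return expected_attempts(block_rate) - 1


print(expected_attempts(0.5))  # 2.0: half of all attempts are wasted
```

Under this model a 20% block rate means roughly 1.25 attempts per page. Blocked responses are often smaller than full pages, so the bandwidth overhead is usually somewhat lower than the request overhead.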

Cut Your Web Scraping Costs With Smart Rotation Strategies

IP rotation is essential for bypassing scraping protections, but not all rotation systems are equal. Using a provider that offers smart auto rotation will reduce the chances of failed requests and help you use fewer resources. This, in turn, lowers both scraping costs and time-to-data. Manual proxy rotation or using a static pool might be cheaper upfront but can result in higher retry rates and wasted bandwidth. Opting for services that offer global coverage allows you to rotate IPs by region, increasing both accuracy and cost-efficiency.
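If you do manage rotation yourself, a pool that retires failing proxies avoids sending request after request through an IP that is already blocked. The sketch below is one simple way to do this, not how any particular provider implements auto rotation. The `ProxyPool` class and the proxy labels are hypothetical.

```python
from collections import deque


class ProxyPool:
    """Round-robin pool that drops a proxy after repeated consecutive failures."""

    def __init__(self, proxies, max_failures=3):
        self._queue = deque(proxies)
        self._failures = {p: 0 for p in proxies}
        self._max_failures = max_failures

    def get(self):
        """Return the next proxy in rotation."""
        if not self._queue:
            raise RuntimeError("no healthy proxies left")
        proxy = self._queue[0]
        self._queue.rotate(-1)  # move the proxy just used to the back
        return proxy

    def report(self, proxy, ok):
        """Record whether a request made through proxy succeeded."""
        if ok:
            self._failures[proxy] = 0
            return
        self._failures[proxy] += 1
        if self._failures[proxy] >= self._max_failures and proxy in self._queue:
            self._queue.remove(proxy)


pool = ProxyPool(["proxy-a", "proxy-b", "proxy-c"], max_failures=2)
proxy = pool.get()             # "proxy-a"
pool.report(proxy, ok=False)   # one failure, still in rotation
```

A production setup would typically also add cooldown periods and per-site health tracking, which is part of what managed rotation services take off your hands.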

Optimize Requests and Avoid Redundant Data Collection

Not every page or element on a website needs to be scraped. Optimizing the structure of your HTTP requests, removing unnecessary headers, or switching to APIs where available can drastically reduce scraping costs. Many developers unknowingly waste resources by downloading large pages or media files when only a snippet of data is needed. Consider breaking scraping into focused segments and using queue systems to prioritize valuable URLs first. Efficient data parsing and transformation also reduce the need for reprocessing and storage, both of which add to cost.
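The queue idea can be sketched with the standard library alone. The example below is a minimal illustration that assumes a lower number means higher priority and that URLs differing only in fragment or host casing point to the same page. `UrlQueue` and `normalize` are illustrative names.

```python
import heapq
from urllib.parse import urlsplit, urlunsplit


def normalize(url):
    """Drop the fragment and lowercase scheme and host so duplicates collapse."""
    parts = urlsplit(url)
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(),
                       parts.path or "/", parts.query, ""))


class UrlQueue:
    """Priority queue that skips URLs it has already seen."""

    def __init__(self):
        self._heap = []
        self._seen = set()
        self._counter = 0  # tie-breaker keeps insertion order stable

    def add(self, url, priority):
        """Queue url; lower priority numbers are fetched first."""
        key = normalize(url)
        if key in self._seen:
            return False  # duplicate, so no bandwidth is spent on it
        self._seen.add(key)
        heapq.heappush(self._heap, (priority, self._counter, key))
        self._counter += 1
        return True

    def pop(self):
        """Return the most valuable URL still waiting."""
        return heapq.heappop(self._heap)[2]

    def __len__(self):
        return len(self._heap)
```

Feeding product pages in at priority 1 and category pages at priority 5, for example, means a run that is cut short by budget or time still fetches the highest-value URLs.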

How Unlimited Bandwidth Helps Lower Your Budget

Proxy providers that offer unlimited bandwidth allow you to scrape more predictably. Fixed pricing models are particularly useful for teams or businesses running constant scraping tasks. By choosing unlimited residential proxies, you remove the uncertainty associated with volume-based billing. This also makes budgeting simpler and allows your developers to scale up operations without waiting for cost approvals. The benefit extends to testing and debugging phases too, where bandwidth is often consumed at unpredictable rates.
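Whether a flat plan actually saves money depends on your volume. A quick break-even check, using placeholder prices rather than any provider's real rates, might look like this:

```python
def break_even_gb(flat_monthly_fee, price_per_gb):
    """Monthly traffic (GB) above which a flat plan beats metered billing."""
    return flat_monthly_fee / price_per_gb


def cheaper_plan(expected_gb, flat_monthly_fee, price_per_gb):
    """Return "flat" or "metered" for the expected monthly traffic."""
    metered_cost = expected_gb * price_per_gb
    return "flat" if flat_monthly_fee < metered_cost else "metered"


# Placeholder prices: 300 per month flat versus 3 per GB metered.
print(break_even_gb(300, 3))      # 100.0
print(cheaper_plan(150, 300, 3))  # flat
```

If your expected traffic sits well below the break-even point, metered billing may still be the cheaper option, so it is worth running the numbers before committing.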

Why Global Coverage Matters When Scraping at Scale

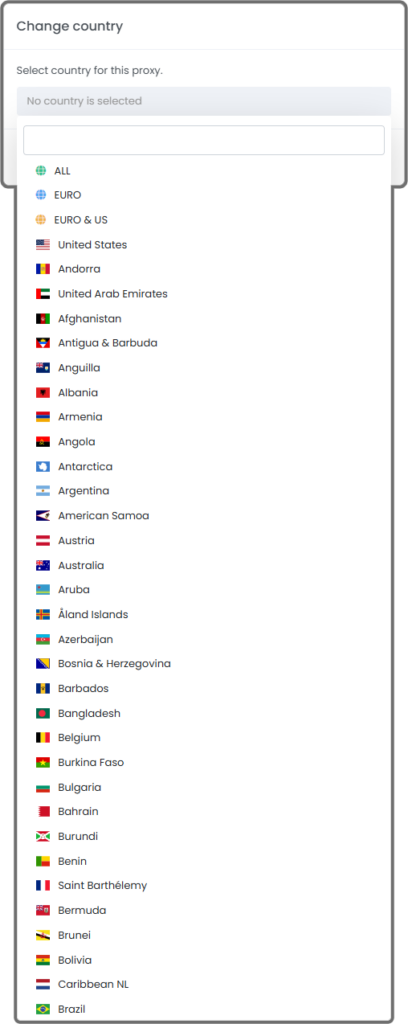

If your scraping targets are distributed globally, it makes sense to use a proxy network that matches that scope. Global coverage helps you avoid geoblocks and latency issues. More importantly, scraping from IPs in or near the target's region reduces failure rates and improves accuracy, because you receive the content that local users actually see. Providers offering rotating residential proxies with endpoints in multiple countries can lower costs by cutting the retries and incorrect responses caused by location-based content.
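One simple way to match proxy region to target is to derive it from the site's country-code domain. The sketch below assumes a hand-maintained mapping and a default region. The mapping, the default, and the function name are all hypothetical, and how you pass the chosen region to a proxy depends on your provider.

```python
from urllib.parse import urlsplit

# Hypothetical mapping from the last domain label to a proxy country code.
COUNTRY_BY_TLD = {"de": "DE", "fr": "FR", "jp": "JP", "br": "BR", "uk": "GB"}
DEFAULT_COUNTRY = "US"


def country_for(url):
    """Pick a proxy country based on the target's top-level domain."""
    host = urlsplit(url).hostname or ""
    tld = host.rsplit(".", 1)[-1]
    return COUNTRY_BY_TLD.get(tld, DEFAULT_COUNTRY)


print(country_for("https://shop.example.de/item/1"))  # DE
```

Generic domains such as `.com` fall back to the default here, so sites that serve several markets from one domain would need an explicit override.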

Leverage Proxy Rotation Tools with Cost in Mind

Some scraping platforms bundle proxy management, rotation, and retry logic into a single service. These tools are not just about convenience. They optimize performance to reduce the total cost of ownership. When you calculate scraping costs, it’s important to factor in the hidden costs of managing infrastructure, maintaining IP hygiene, and handling bans. Tools that offer integrated auto rotation, session control, and region selection streamline your scraping logic and reduce wasteful code and compute resources.

How ProxyTee Helps You Cut Your Web Scraping Costs

ProxyTee is a proxy solution that offers unlimited bandwidth, residential proxies, and automatic rotation as part of its service. It is specifically designed to optimize scraping costs for teams and businesses operating at scale. With global coverage and user-friendly configuration, it removes the need for complex setups while ensuring stable access to target data sources. ProxyTee stands out with its fixed pricing model, making it easier to plan your scraping budget ahead of time. Whether you’re targeting local search results or aggregating product listings, it provides a reliable and cost-efficient backbone.

Key Factors to Compare When Choosing a Proxy for Cost Optimization

Here are some important aspects to compare when choosing a proxy provider for scraping:

- Browser and tool support: Ensure compatibility with your preferred scraping tools or headless browsers. Some proxies are optimized for Puppeteer or Selenium.

- Language ecosystem: Check if the service has client libraries for Python, Node.js, or others to reduce development time.

- Setup complexity: Look for providers with simple integration options like endpoint-based access or preconfigured scripts.

- Speed and reliability: Speed affects how much data you can pull per minute, and reliability ensures fewer failures.

- Community and documentation: Well-documented APIs and active support communities reduce the cost of trial and error.

Make Your Budget Work Harder With Efficient Scraping

Ultimately, cutting your web scraping costs is not about limiting how much data you collect, but about collecting it smarter. Start by using residential proxies with auto rotation, which help you avoid detection and cut down on retries. Choose services that offer unlimited bandwidth to remove unpredictable pricing from your workflow. Make use of global coverage to scrape region-specific data with less resistance. Finally, always refine your requests and parsing logic to prevent data waste. Efficient scraping is the result of mindful planning, smart tools, and clear cost awareness. The strategies shared here will help you build a resilient, cost-effective scraping pipeline that delivers results without breaking the bank.