Web Scraping for Machine Learning with ProxyTee

Web scraping has become essential for many businesses. It automates data collection, delivers results quickly, keeps costs down, and supports data-driven market analysis. But how will machine learning (ML) influence data scraping techniques?

Understanding Machine Learning

Machine learning, a core part of data science, mimics human learning by using algorithms to analyze data. This approach automates processes, requiring minimal manual coding from developers. It applies to many areas such as:

- Customer Service: AI-powered chatbots are taking over customer service, providing instant answers to common queries.

- Web Unblocking: AI- and ML-driven proxy solutions enable smoother data gathering with fewer blocks and errors.

- Computer Vision: Machine learning extracts insights from visual data, enabling recognition tasks like those in self-driving cars.

- Stock Trading: Automated trading powered by algorithms optimizes stock portfolios.

Web Scraping’s Crucial Role in Machine Learning

Web scraping is vital for gathering the high-quality data that machine learning needs. Internal data is useful but limited, so scraping external sources provides more comprehensive data points and can lead to better results. This is why demand for more sophisticated data gathering tools is growing.

In this post, we’ll explore how to combine web scraping and machine learning to analyze stock prices, using ProxyTee to make the data gathering process smoother and quicker.

From Web to Model: Data Preparation in Action

1️⃣ Project Setup and Requirements

We will use Python 3.9, along with the following libraries:

- Web scraping: Requests-HTML and BeautifulSoup4

- Machine learning: pandas, NumPy, Matplotlib, Seaborn, scikit-learn, TensorFlow, and Keras.

Install the required libraries:

$ python3 -m pip install requests_html beautifulsoup4
$ python3 -m pip install pandas numpy matplotlib seaborn tensorflow scikit-learn keras

2️⃣ Data Extraction and Preparation

We will use Jupyter Notebook to execute and demonstrate code and graphs.

First, import the libraries:

from requests_html import HTMLSession

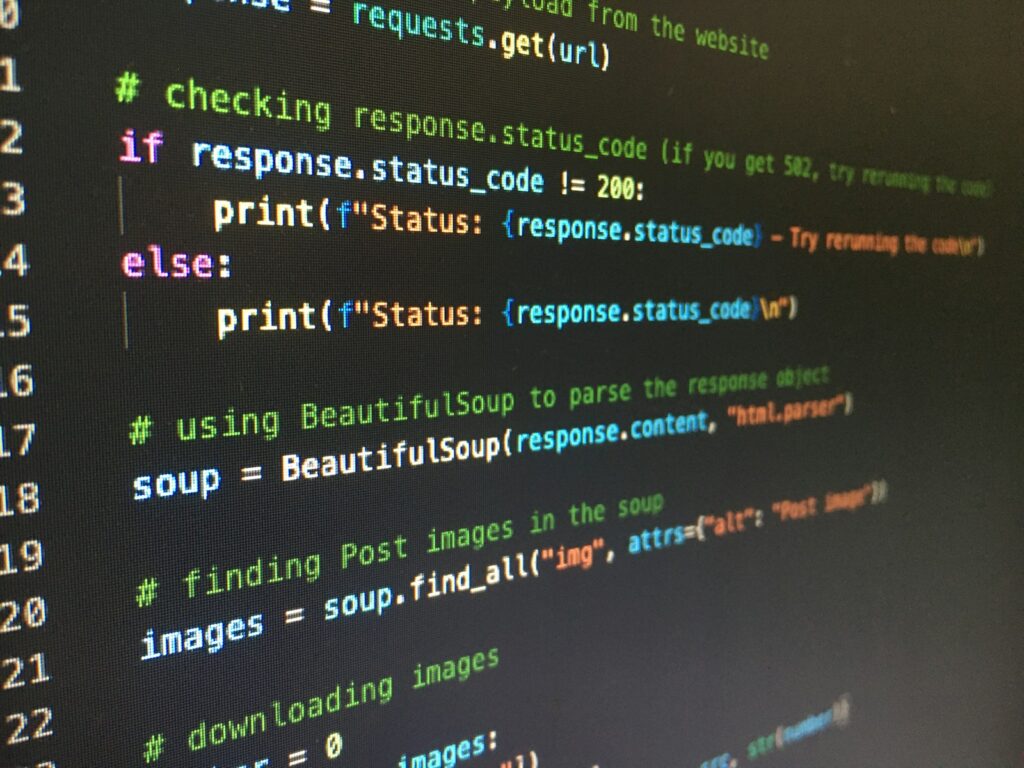

import pandas as pdUse Requests-HTML to extract the HTML from the target webpage.
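Since this post relies on a proxy service for fetching, it may help to see how a proxy is usually attached to a request. `HTMLSession` extends the Requests session class, so it accepts the standard `proxies` mapping. The sketch below only builds that mapping. The host, port, and credentials are placeholders rather than real ProxyTee values, and `build_proxies` is a hypothetical helper introduced for illustration.

```python
from urllib.parse import quote


def build_proxies(host, port, username, password):
    """Return a Requests-style proxies mapping for an authenticated HTTP proxy."""
    # Percent-encode the credentials so characters such as '@' or ':'
    # cannot be mistaken for URL delimiters.
    auth = f"{quote(username, safe='')}:{quote(password, safe='')}"
    proxy_url = f"http://{auth}@{host}:{port}"
    # Route both plain HTTP and HTTPS traffic through the same proxy.
    return {"http": proxy_url, "https": proxy_url}


# Placeholder values (assumptions): replace them with your own proxy details.
proxies = build_proxies("proxy.example.com", 8080, "user", "p@ss")
print(proxies["https"])

# Hypothetical usage with the session created in this tutorial:
# r = session.get(url, proxies=proxies)
```

The request shown next is the tutorial's original call, which is sent without a proxy.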

url = 'https://finance.yahoo.com/quote/AAPL/history?p=AAPL&guccounter=1&period1=1556113078&period2=1713965616'
session = HTMLSession()
r = session.get(url)

Using XPath, extract the necessary data into a list of dictionaries:

rows = r.html.xpath('//table/tbody/tr')
symbol = 'AAPL'
data = []
for row in rows:
    # Skip rows that do not contain all seven price columns
    if len(row.xpath('.//td')) < 7:
        continue
    data.append({
        'Symbol': symbol,
        'Date': row.xpath('.//td[1]/text()')[0],
        'Open': row.xpath('.//td[2]/text()')[0],
        'High': row.xpath('.//td[3]/text()')[0],
        'Low': row.xpath('.//td[4]/text()')[0],
        'Close': row.xpath('.//td[5]/text()')[0],
        'Adj Close': row.xpath('.//td[6]/text()')[0],
        'Volume': row.xpath('.//td[7]/text()')[0]
    })

Convert this list of dictionaries to a pandas DataFrame to store the collected data:

df = pd.DataFrame(data)
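To make the row filter in the extraction loop easier to follow, here is a minimal offline sketch of the same idea. It uses the standard library's `xml.etree.ElementTree` instead of Requests-HTML, and the table markup and all values in it are invented for illustration. ElementTree needs well-formed markup, which real pages rarely are, so this only demonstrates the logic, not a replacement for the scraper.

```python
import xml.etree.ElementTree as ET

# Invented sample markup (an assumption), shaped like a price-history table.
# The middle row has fewer cells, like a non-price row would.
SAMPLE = """
<table>
  <tbody>
    <tr><td>Jan 3, 2024</td><td>100.00</td><td>101.50</td><td>99.25</td>
        <td>101.00</td><td>100.75</td><td>1,000,000</td></tr>
    <tr><td>Jan 2, 2024</td><td>0.25 Dividend</td></tr>
    <tr><td>Jan 1, 2024</td><td>98.00</td><td>100.25</td><td>97.50</td>
        <td>100.00</td><td>99.75</td><td>1,250,000</td></tr>
  </tbody>
</table>
"""

COLUMNS = ["Date", "Open", "High", "Low", "Close", "Adj Close", "Volume"]


def parse_rows(markup, symbol):
    """Return one dictionary per table row that has all expected columns."""
    root = ET.fromstring(markup.strip())
    records = []
    for tr in root.findall(".//tbody/tr"):
        cells = [td.text for td in tr.findall("td")]
        if len(cells) < len(COLUMNS):
            continue  # incomplete row: skip it, as the tutorial's loop does
        record = {"Symbol": symbol}
        record.update(zip(COLUMNS, cells))
        records.append(record)
    return records


records = parse_rows(SAMPLE, "AAPL")
print(len(records), records[0]["Volume"])
```

The values are still strings at this point, including the comma in the volume, which is why a cleaning step is needed before modeling.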

3️⃣ Cleaning Data for Machine Learning

Data cleaning is necessary before training the model.

- First, convert the ‘Date’ column to DateTime format.

- Remove the thousands-separator commas from the numeric values.

- Then, convert the numeric columns from strings to float.

- Drop missing values.

- Set the date as the index column of the DataFrame.
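The steps above can be sketched on a single record in plain Python, which makes each transformation visible without pandas. The sample rows are invented, the `"-"` placeholder for a missing value is an assumption about what a scraped cell might contain, and `clean_record` is a hypothetical helper.

```python
from datetime import datetime

NUMERIC = ["Open", "High", "Low", "Close", "Adj Close", "Volume"]


def clean_record(record):
    """Return a cleaned copy of one scraped row, or None if a value is unusable."""
    # Parse dates written like "Jan 3, 2024" (assumes English month names).
    cleaned = {"Date": datetime.strptime(record["Date"], "%b %d, %Y")}
    for name in NUMERIC:
        text = record[name].replace(",", "")  # remove thousands separators
        try:
            cleaned[name] = float(text)
        except ValueError:
            return None  # not a number: treat the whole row as missing
    return cleaned


# Invented sample rows; the second has a placeholder instead of a price.
raw = [
    {"Date": "Jan 3, 2024", "Open": "100.00", "High": "101.50", "Low": "99.25",
     "Close": "101.00", "Adj Close": "100.75", "Volume": "1,000,000"},
    {"Date": "Jan 2, 2024", "Open": "-", "High": "100.25", "Low": "97.50",
     "Close": "100.00", "Adj Close": "99.75", "Volume": "1,250,000"},
]

rows = [c for c in map(clean_record, raw) if c is not None]  # drop missing rows
by_date = {row["Date"]: row for row in rows}  # the date acts as the index
print(by_date[datetime(2024, 1, 3)]["Volume"])
```

The pandas code that follows applies the same steps to whole columns at once.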

df['Date'] = pd.to_datetime(df['Date'])
# 'Open' is included because it is used as a model feature later
str_cols = ['Open', 'High', 'Low', 'Close', 'Adj Close', 'Volume']
df[str_cols] = df[str_cols].replace(',', '', regex=True).astype(float)
df.dropna(inplace=True)
df = df.set_index('Date')
df.head()

4️⃣ Data Visualization

To see a trend in price data, let’s first visualize the closing stock price of Apple.

import matplotlib.pyplot as plt
import seaborn as sns

sns.set_style('darkgrid')
plt.style.use('ggplot')
plt.figure(figsize=(15, 6))
df['Adj Close'].plot()
plt.ylabel('Adj Close')
plt.xlabel(None)
plt.title('Closing Price of AAPL')
plt.show()

5️⃣ Preparing Data for Machine Learning Model

We use ‘Open’, ‘High’, ‘Low’, and ‘Volume’ as the input features and ‘Adj Close’ as the variable to be predicted. The features are rescaled with MinMaxScaler before training.
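The code in this step rescales each feature with `MinMaxScaler`. With its default settings, the transformation for each column is x' = (x - min) / (max - min), which maps the smallest value to 0 and the largest to 1. A minimal plain-Python sketch of that formula, with `min_max_scale` as a hypothetical helper and invented sample values:

```python
def min_max_scale(column):
    """Rescale a list of numbers to the [0, 1] range, column-wise min-max style."""
    lo, hi = min(column), max(column)
    span = hi - lo
    if span == 0:
        # A constant column carries no spread; map every value to 0.0.
        return [0.0 for _ in column]
    return [(x - lo) / span for x in column]


# Invented sample values for one feature column.
opens = [10.0, 15.0, 20.0]
scaled = min_max_scale(opens)
print(scaled)
```

One design point worth keeping in mind: fitting the scaler on the full dataset, as the tutorial's code does, lets values from the test period influence the scaling. Fitting on the training rows only and then transforming the test rows is a common alternative.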

from sklearn.preprocessing import MinMaxScaler

features = ['Open', 'High', 'Low', 'Volume']
y = df.filter(['Adj Close'])
scaler = MinMaxScaler()
X = scaler.fit_transform(df[features])

Split the data into training and test sets using TimeSeriesSplit, then reshape it for use with LSTM networks.
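To see what such a split produces, the sketch below re-implements the expanding-window logic in plain Python. It is an illustrative approximation of `TimeSeriesSplit` with default settings (no gap and no cap on the training size), not the library's own code, and `time_series_splits` is a hypothetical name.

```python
def time_series_splits(n_samples, n_splits):
    """Yield (train_indices, test_indices) pairs for expanding-window splits."""
    test_size = n_samples // (n_splits + 1)
    if test_size == 0:
        raise ValueError("not enough samples for the requested number of splits")
    first_test_start = n_samples - n_splits * test_size
    for start in range(first_test_start, n_samples, test_size):
        # Train on everything before the test window, so no future data leaks in.
        yield list(range(start)), list(range(start, start + test_size))


splits = list(time_series_splits(12, 3))
for train, test in splits:
    print(len(train), test)

# Only the final pair survives a loop that overwrites its variables each time.
last_train, last_test = splits[-1]
```

Because the tutorial's loop reassigns `X_train` and `X_test` on every iteration, the model ends up trained on the last and largest split only. The tutorial's own split code follows.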

from sklearn.model_selection import TimeSeriesSplit

tscv = TimeSeriesSplit(n_splits=10)
# Each iteration overwrites the previous one, so the last split is kept
for train_index, test_index in tscv.split(X):
    X_train, X_test = X[train_index], X[test_index]
    y_train, y_test = y.iloc[train_index], y.iloc[test_index]

# LSTM layers expect input shaped as (samples, timesteps, features)
X_train = X_train.reshape(X_train.shape[0], 1, X_train.shape[1])
X_test = X_test.reshape(X_test.shape[0], 1, X_test.shape[1])

6️⃣ Training the Model

Build a Sequential model with an LSTM layer followed by a Dense output layer:

from keras.models import Sequential
from keras.layers import LSTM, Dense

model = Sequential()
model.add(LSTM(32, activation='relu', return_sequences=False))
model.add(Dense(1))
model.compile(loss='mean_squared_error', optimizer='adam')
model.fit(X_train, y_train, epochs=100, batch_size=8)

Now that the model is trained, we use it to predict on the test set and compare the predicted values against the actual ones.

y_pred = model.predict(X_test)

plt.figure(figsize=(15, 6))
plt.plot(y_test.values, label='Actual Value')
plt.plot(y_pred, label='Predicted Value')
# The target was not scaled, so the axis shows actual prices
plt.ylabel('Adjusted Close')
plt.xlabel('Time Scale')
plt.legend()
plt.show()

The plot shows that the predictions generally follow the actual stock trends.
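A visual comparison can be complemented with a number. Two common choices are the mean absolute error (MAE) and the root mean squared error (RMSE). The sketch below computes both in plain Python on invented values; `mae` and `rmse` are hypothetical helpers, not part of the tutorial's code.

```python
import math


def mae(actual, predicted):
    """Mean absolute error: the average size of the prediction errors."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def rmse(actual, predicted):
    """Root mean squared error: like MAE, but penalizes large errors more."""
    squared = [(a - p) ** 2 for a, p in zip(actual, predicted)]
    return math.sqrt(sum(squared) / len(actual))


# Invented sample values standing in for actual and predicted prices.
actual = [100.0, 102.0, 101.0, 105.0]
predicted = [101.0, 101.0, 103.0, 104.0]
print(mae(actual, predicted), rmse(actual, predicted))

# Hypothetical usage with the tutorial's variables:
# mae(y_test.values.ravel(), y_pred.ravel())
```

Both metrics are expressed in the same unit as the target, so with an unscaled ‘Adj Close’ they read directly as a price difference.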

7️⃣ Full Code:

from requests_html import HTMLSession
import pandas as pd
import matplotlib.pyplot as plt
import seaborn as sns
from sklearn.preprocessing import MinMaxScaler
from sklearn.model_selection import TimeSeriesSplit
from keras.models import Sequential
from keras.layers import LSTM, Dense

# Fetch the price history page
url = 'https://finance.yahoo.com/quote/AAPL/history?p=AAPL&guccounter=1&period1=1556113078&period2=1713965616'
session = HTMLSession()
r = session.get(url)

# Extract the table rows into a list of dictionaries
rows = r.html.xpath('//table/tbody/tr')
symbol = 'AAPL'
data = []
for row in rows:
    # Skip rows that do not contain all seven price columns
    if len(row.xpath('.//td')) < 7:
        continue
    data.append({
        'Symbol': symbol,
        'Date': row.xpath('.//td[1]/text()')[0],
        'Open': row.xpath('.//td[2]/text()')[0],
        'High': row.xpath('.//td[3]/text()')[0],
        'Low': row.xpath('.//td[4]/text()')[0],
        'Close': row.xpath('.//td[5]/text()')[0],
        'Adj Close': row.xpath('.//td[6]/text()')[0],
        'Volume': row.xpath('.//td[7]/text()')[0]
    })
df = pd.DataFrame(data)

# Clean the data
df['Date'] = pd.to_datetime(df['Date'])
str_cols = ['Open', 'High', 'Low', 'Close', 'Adj Close', 'Volume']
df[str_cols] = df[str_cols].replace(',', '', regex=True).astype(float)
df.dropna(inplace=True)
df = df.set_index('Date')
df.head()

# Visualize the adjusted closing price
sns.set_style('darkgrid')
plt.style.use('ggplot')
plt.figure(figsize=(15, 6))
df['Adj Close'].plot()
plt.ylabel('Adj Close')
plt.xlabel(None)
plt.title('Closing Price of AAPL')
plt.show()

# Prepare features and target
features = ['Open', 'High', 'Low', 'Volume']
y = df.filter(['Adj Close'])
scaler = MinMaxScaler()
X = scaler.fit_transform(df[features])

# Each iteration overwrites the previous one, so the last split is kept
tscv = TimeSeriesSplit(n_splits=10)
for train_index, test_index in tscv.split(X):
    X_train, X_test = X[train_index], X[test_index]
    y_train, y_test = y.iloc[train_index], y.iloc[test_index]

# LSTM layers expect input shaped as (samples, timesteps, features)
X_train = X_train.reshape(X_train.shape[0], 1, X_train.shape[1])
X_test = X_test.reshape(X_test.shape[0], 1, X_test.shape[1])

# Build and train the model
model = Sequential()
model.add(LSTM(32, activation='relu', return_sequences=False))
model.add(Dense(1))
model.compile(loss='mean_squared_error', optimizer='adam')
model.fit(X_train, y_train, epochs=100, batch_size=8)

# Compare predictions with actual values
y_pred = model.predict(X_test)
plt.figure(figsize=(15, 6))
plt.plot(y_test.values, label='Actual Value')
plt.plot(y_pred, label='Predicted Value')
plt.ylabel('Adjusted Close')
plt.xlabel('Time Scale')
plt.legend()
plt.show()

Conclusion

This post demonstrated how ProxyTee can be used together with web scraping and machine learning to forecast stock prices. With ProxyTee, we can ensure consistent access and performance for this important process.

Our Unlimited Residential Proxies offer rotating residential IPs, unlimited bandwidth, and global coverage, all of which contribute to a smooth and reliable web scraping experience. Auto rotation also helps avoid detection and blocks when gathering data from different websites, and the simple API makes it easy to integrate these services into different applications and workflows.

For those looking to efficiently scrape web data, ProxyTee provides robust solutions. From our affordable pricing to various Residential Proxy and Datacenter Proxy options, we can accommodate a range of requirements and tasks.